Motivation

We currently track extractor messages by resource: for a dataset or file, the user can view a list of extractor events associated with that resource.

The list of events is further categorized by extractor, but this can still be inadequate for tracking multiple jobs running on the same extractor.

The problem is compounded if multiple replicas or threads are running for a particular extractor. In that case, two jobs can send updates for the same extractor + file or dataset combination at the same time, and it becomes impossible to determine which job sent which update.

Beyond resolving the problem stated above, expanded extractor job tracking would also help extractor developers debug and analyze their extractor jobs and identify possible performance improvements.

Current Behavior

API

The API defines the ExtractorInfo and Extraction models. The MongoDBExtractorService can be used to create and interact with those models within MongoDB.

On Startup

The API for file uploads includes an optional call to the RabbitMqPlugin.

If the RabbitMQ plugin is enabled, Clowder subscribes to RabbitMQ to receive heartbeat messages from each extractor, which it uses to determine whether an extractor is still online and functioning. (This is the ExtractorInfo model.)
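As a rough illustration of how heartbeats can signal liveness, the sketch below treats an extractor as online only if a heartbeat arrived within a timeout. This is a simplified model, not Clowder's implementation: the `HEARTBEAT_TIMEOUT` value, the `last_seen` mapping, the extractor names, and both function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical timeout: no heartbeat within this window means "offline".
HEARTBEAT_TIMEOUT = timedelta(minutes=5)


def record_heartbeat(last_seen: dict[str, datetime], extractor: str, at: datetime) -> None:
    """Remember the most recent heartbeat time for an extractor."""
    last_seen[extractor] = at


def is_online(last_seen: dict[str, datetime], extractor: str, now: datetime) -> bool:
    """An extractor is online if it sent a heartbeat within HEARTBEAT_TIMEOUT."""
    seen = last_seen.get(extractor)
    return seen is not None and now - seen <= HEARTBEAT_TIMEOUT


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    last_seen: dict[str, datetime] = {}
    record_heartbeat(last_seen, "example.preview", now - timedelta(minutes=1))
    record_heartbeat(last_seen, "example.ocr", now - timedelta(minutes=10))
    print(is_online(last_seen, "example.preview", now))  # True
    print(is_online(last_seen, "example.ocr", now))      # False
```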

When a heartbeat is received or an extractor is registered manually via the API, Clowder subscribes to a "reply queue" to receive updates about that extractor.

On Upload

Each new file upload will push an event into the appropriate extractor queue(s) in RabbitMQ based on the types defined by each extractor.
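To make the routing step concrete, here is a minimal sketch of selecting target queues for an upload by matching its MIME type against the types each extractor declares. It assumes each extractor's queue is named after the extractor and that declared types may use shell-style wildcards such as `image/*`; the registrations and `queues_for_upload` are hypothetical, and Clowder's actual matching rules may differ.

```python
from fnmatch import fnmatch

# Hypothetical registrations: extractor (queue) name -> declared MIME type patterns.
EXTRACTORS = {
    "example.image-preview": ["image/*"],
    "example.pdf-text": ["application/pdf"],
    "example.checksum": ["*/*"],
}


def queues_for_upload(mime_type: str, extractors: dict[str, list[str]]) -> list[str]:
    """Return the queues whose declared type patterns match the uploaded file's MIME type."""
    return sorted(
        name
        for name, patterns in extractors.items()
        if any(fnmatch(mime_type, pattern) for pattern in patterns)
    )


if __name__ == "__main__":
    # One upload can fan out to several extractor queues.
    print(queues_for_upload("image/png", EXTRACTORS))
    # ['example.checksum', 'example.image-preview']
```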

When a reply comes back containing a file + extractor combo, Clowder creates a new Extraction in the database to store this message.

pyClowder

On Startup

pyClowder includes, among other utilities, a client for subscribing to the appropriate queues in RabbitMQ. It knows how to automatically send back heartbeat signals and status updates to Clowder.

When a pyClowder-based extractor starts up, it begins sending heartbeat messages to RabbitMQ indicating the status of the extractor. Clowder subscribes to these messages to determine if an extractor is still online and functioning. (This is the ExtractorInfo model.)

On Upload

TBD

If an extractor is idle and sees a new message in the queue it is watching, it grabs the message and begins processing it, sending status updates back to Clowder as it works.

Each update arrives at Clowder as a reply containing a file + extractor combo, and Clowder stores it as a new Extraction in the database.

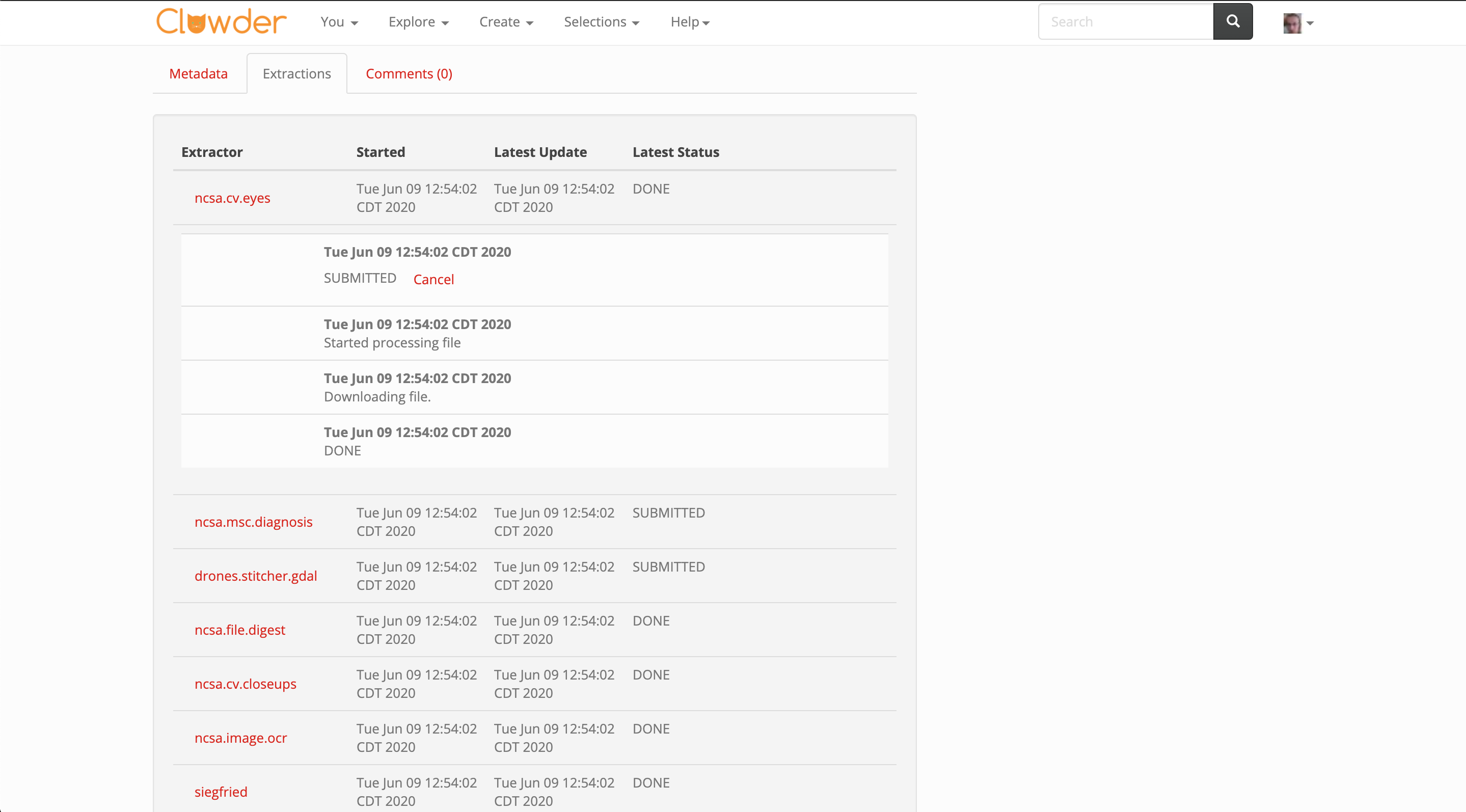

UI

The UI for viewing a file offers an Extractions tab, which lists all Extraction objects associated with the file, grouped by extractor.

Changes Proposed

pyClowder

pyClowder may need minor updates to send more information about a specific job. Alongside the user, resource (e.g. dataset or file), and extractor name, pyClowder should also attach a unique identifier (e.g. a UUID) to related job messages. That is, when an extractor begins working on a new item from the queue, it should generate an identifier for that workload that is unique to that run of the extractor, and include it in every status message it sends for that job.
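A minimal sketch of the pyClowder side, assuming a per-job context object is created when an item is pulled from the queue. `JobContext`, `status_message`, and the message keys are hypothetical and not part of pyClowder's current API; the point is only that every status update from one run carries the same freshly generated UUID.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class JobContext:
    """Hypothetical per-job context created when an extractor picks up a queue item."""
    extractor: str
    resource_type: str  # e.g. "file" or "dataset"
    resource_id: str
    user: str
    # A fresh UUID per run, so concurrent jobs on the same resource stay distinguishable.
    job_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def status_message(self, status: str) -> dict:
        """Build a status update carrying job_id alongside the existing fields."""
        return {
            "extractor": self.extractor,
            "resource_type": self.resource_type,
            "resource_id": self.resource_id,
            "user": self.user,
            "job_id": self.job_id,
            "status": status,
        }


if __name__ == "__main__":
    # Two replicas working on the same file at once get different job IDs.
    first = JobContext("example.extractor", "file", "file-123", "alice")
    second = JobContext("example.extractor", "file", "file-123", "alice")
    print(first.job_id != second.job_id)  # True
    print(first.status_message("STARTED"))
```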

With this identifier in place, we can build a UI that filters and sorts these events without major modifications to the existing UI and without a large performance hit in the frontend.

API

The API changes proposed should be fairly minimal, as we are simply extending the existing Extraction API to account for the new identifier that will need to be added to pyClowder.
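As one possible shape for this change, the sketch below adds an optional `job_id` to an Extraction record and fills it from the reply message when present. Apart from `id` and `job_id`, the field names, the reply keys, and `extraction_from_reply` are assumptions for illustration, not Clowder's actual model. Keeping `job_id` optional means records created before the change still load, with `job_id` left empty.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Extraction:
    """Hypothetical Extraction record; most field names are illustrative."""
    id: str            # existing: unique per status update
    extractor_id: str
    file_id: str
    status: str
    start: datetime
    job_id: Optional[str] = None  # new: shared by every update from one job; None for legacy data


def extraction_from_reply(record_id: str, reply: dict, received: datetime) -> Extraction:
    """Create an Extraction from a reply; replies without a job_id still parse."""
    return Extraction(
        id=record_id,
        extractor_id=reply["extractor"],
        file_id=reply["resource_id"],
        status=reply["status"],
        start=received,
        job_id=reply.get("job_id"),
    )
```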

UI

The UI takes on the brunt of the changes here.

Extractor events are currently displayed as follows:

We offer a tab that lists all extractors that have run on the file or dataset.

The user can click an extractor to expand a subsection containing individual events from that extractor.

We propose altering the UI with the following changes:

- Categorize further based on the unique identifier for each job: this allows users to tell which job sent each update, even when several jobs run on the same extractor at once

- For finished extractor jobs (?), show the elapsed time it took to complete the job
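The two UI changes above amount to a nested grouping plus a duration calculation, sketched below under several assumptions: events are plain dicts with `extractor`, `job_id`, `status`, and `start` keys, the terminal status names in `FINISHED_STATUSES` are hypothetical, and elapsed time is measured from a job's first update to its last. Events without a `job_id` (older records) are collected under `None`, which is one way the existing view could be preserved for them.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical terminal statuses; the real status strings may differ.
FINISHED_STATUSES = {"DONE", "ERROR"}


def group_by_job(events: list[dict]) -> dict:
    """Group events by extractor, then by job_id (None collects legacy events).

    Events within each job are kept in chronological order.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for event in sorted(events, key=lambda e: e["start"]):
        grouped[event["extractor"]][event.get("job_id")].append(event)
    return grouped


def elapsed(job_events: list[dict]) -> Optional[timedelta]:
    """First-to-last update time, or None if the job has not reached a terminal status."""
    if not job_events or job_events[-1]["status"] not in FINISHED_STATUSES:
        return None
    return job_events[-1]["start"] - job_events[0]["start"]


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0, 0)
    # Two interleaved jobs on the same extractor + file, as in the Motivation section.
    events = [
        {"extractor": "ex", "job_id": "a", "status": "STARTED", "start": t0},
        {"extractor": "ex", "job_id": "b", "status": "STARTED", "start": t0 + timedelta(seconds=1)},
        {"extractor": "ex", "job_id": "a", "status": "DONE", "start": t0 + timedelta(seconds=30)},
        {"extractor": "ex", "job_id": "b", "status": "PROCESSING", "start": t0 + timedelta(seconds=40)},
    ]
    grouped = group_by_job(events)
    print(elapsed(grouped["ex"]["a"]))  # 0:00:30
    print(elapsed(grouped["ex"]["b"]))  # None
```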

Nice to have:

- Display the user who initiated the job. (For new uploads, should we show the user who uploaded the file?)

The final product might look something like this:

Open Questions

- What do we do about past extractions/jobs that are missing this identifier? Is it worth preserving the existing display in the UI for this purpose?

- Extraction already has an id field that is unique per status update. Would adding a separate job_id field keep this data and view as backward-compatible as possible?