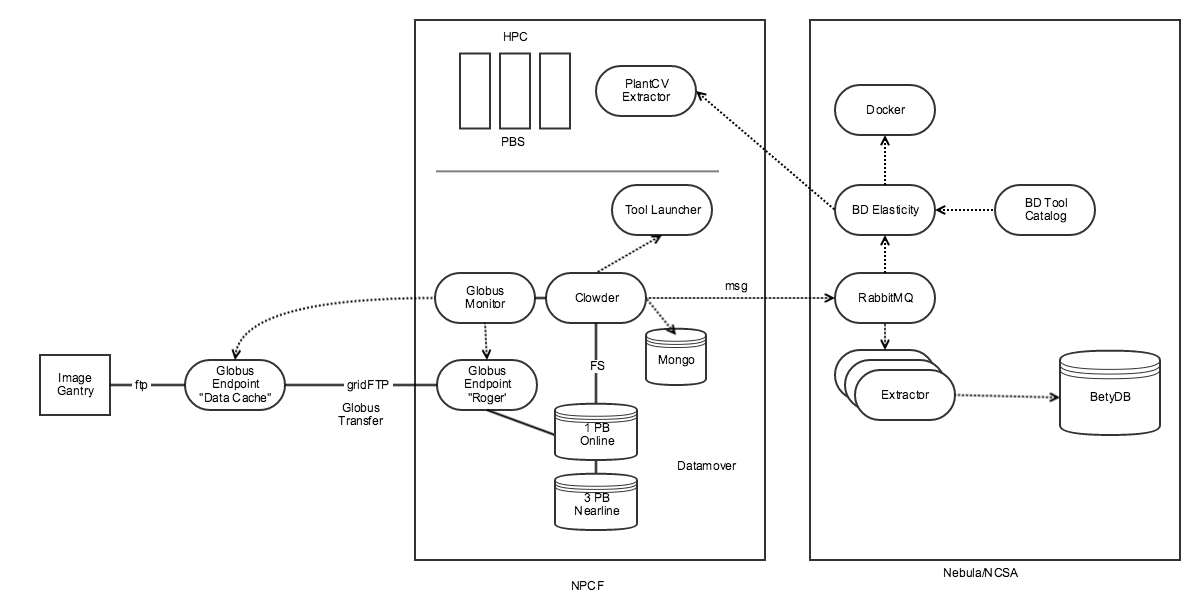

Terra

- 1 TB of image data per day

- Images are transferred from Gantry to "Data Cache" via FTP

- Images are transferred from Data Cache to Roger using Globus Transfer

- A Globus monitor watches the endpoints for completed transfers. Once a transfer completes, its files are ingested into Clowder.

- Files are stored on disk; metadata is stored in MongoDB

- Clowder, the Globus monitor, and the Tool Launcher all run on OpenStack

- Storage: 1 PB online and 3 PB nearline

- A CyberGIS HPC allocation is used for extractor "elasticity"

- Clowder uses a centralized RabbitMQ and extractor bus, hosted at NCSA

- The BrownDog elasticity module can expand capacity via Docker or PBS.

- Consider cases involving MATLAB licenses (BYO, bring your own) or Windows VMs used by commercial partners
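The ingest path above (a transfer completes, the monitor notices, files are ingested into Clowder, extractors are triggered through the message bus, and capacity grows with load) can be sketched as a minimal in-memory model. This is not the Clowder, Globus, or BrownDog code: `Transfer`, `Catalog`, `poll_once`, and `workers_needed` are hypothetical names, a `deque` stands in for RabbitMQ, a dict stands in for disk plus MongoDB, the status strings are only modelled loosely on Globus task states, and the scaling rule (one worker per N queued jobs, clamped to a range) is an assumed policy rather than the elasticity module's actual logic.

```python
import math
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Transfer:
    """A transfer task as the monitor sees it (hypothetical model)."""
    task_id: str
    files: list
    status: str  # e.g. "ACTIVE", "SUCCEEDED", "FAILED"


@dataclass
class Catalog:
    """Stand-in for Clowder: real file bytes go to disk, metadata to MongoDB."""
    files: dict = field(default_factory=dict)  # path -> metadata

    def ingest(self, path, metadata):
        self.files[path] = metadata


def poll_once(transfers, seen, catalog, queue):
    """One pass of a hypothetical transfer monitor.

    Ingests the files of each newly completed transfer into the catalog and
    publishes one extraction message per file to `queue` (a RabbitMQ stand-in).
    Returns the number of files ingested on this pass.
    """
    ingested = 0
    for t in transfers:
        if t.status != "SUCCEEDED" or t.task_id in seen:
            continue  # still running, failed, or already ingested
        seen.add(t.task_id)
        for path in t.files:
            catalog.ingest(path, {"transfer": t.task_id})
            queue.append(path)  # an extractor worker would consume this
            ingested += 1
    return ingested


def workers_needed(queue_depth, jobs_per_worker, min_workers=1, max_workers=50):
    """Assumed elasticity rule: one worker per `jobs_per_worker` queued jobs,
    clamped to [min_workers, max_workers]."""
    wanted = math.ceil(queue_depth / jobs_per_worker)
    return max(min_workers, min(max_workers, wanted))


if __name__ == "__main__":
    transfers = [
        Transfer("t1", ["scan_001.bin", "scan_002.bin"], "SUCCEEDED"),
        Transfer("t2", ["scan_003.bin"], "ACTIVE"),
    ]
    seen, catalog, queue = set(), Catalog(), deque()
    print(poll_once(transfers, seen, catalog, queue))  # 2
    print(poll_once(transfers, seen, catalog, queue))  # 0 (t1 already handled)
    print(workers_needed(len(queue), jobs_per_worker=10))  # 1
```

Tracking handled task IDs in `seen` keeps the monitor idempotent: re-polling the same list of transfers does not ingest or enqueue the same files twice.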

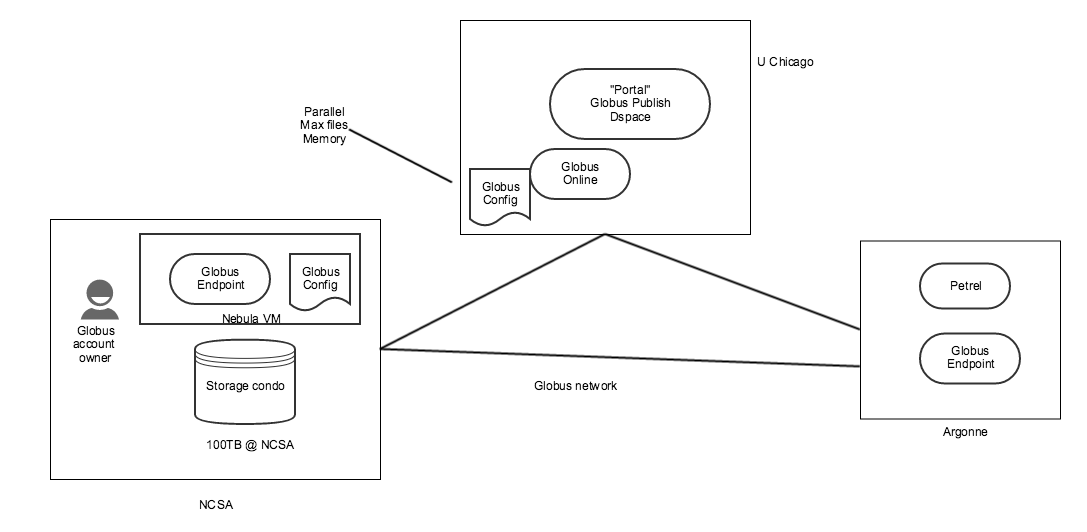

MDF

- 3-year pilot: submit raw data, exchange big data, network of Globus endpoints with a front end (DSpace), Petrel

- Administration: users must be added to endpoints manually before they can transfer data

- Keep endpoint up and running

- Community outreach (get more data)

- Metadata schema (electron microscopy)

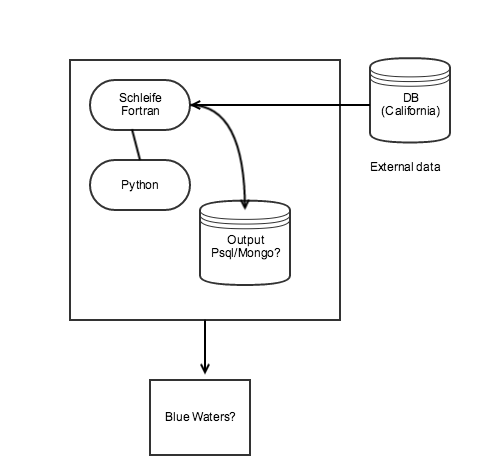

Developer environment:

- VM with 4 CPUs; TextWrangler + IntelliJ

- Must be able to replicate the Scheife process

HTRC

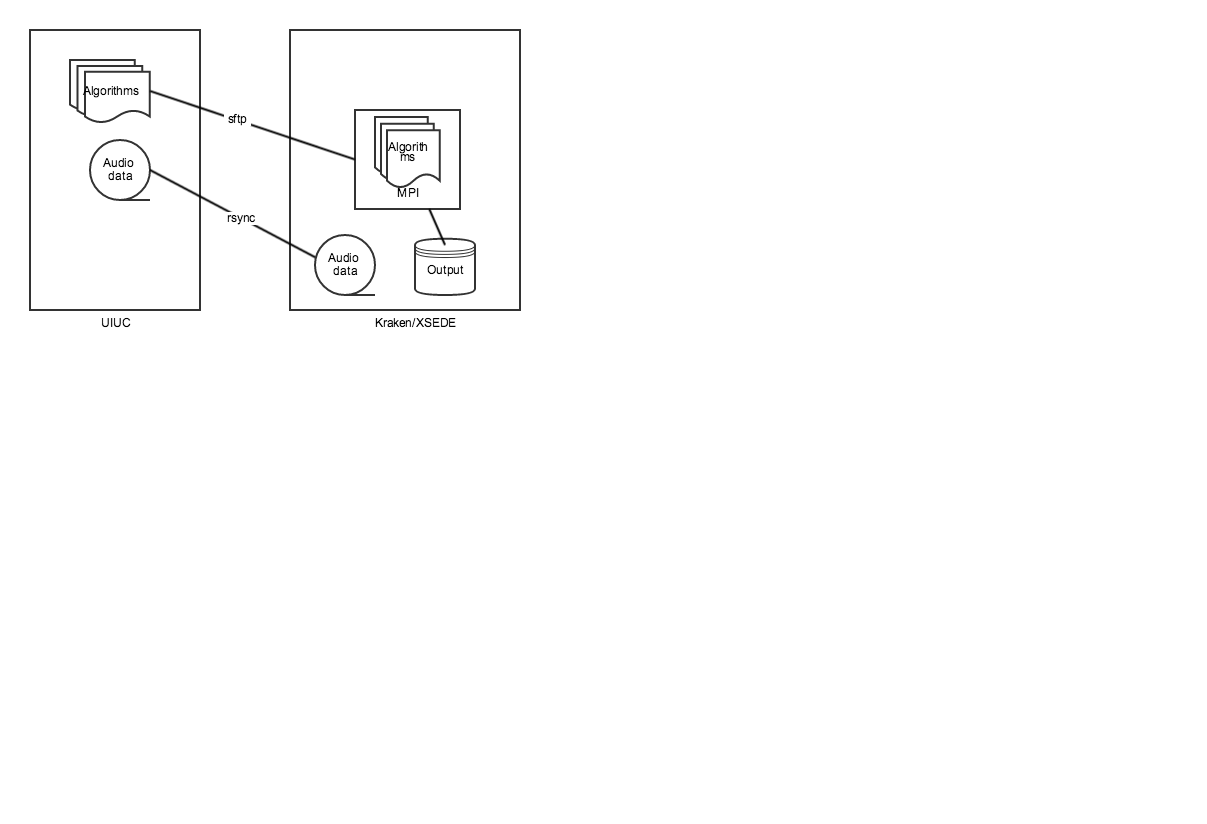

SALAMI

This is the basic workflow used for Stephen Downie's Structural Analysis of Large Amounts of Music Information (SALAMI).

- Seven algorithms for music structure analysis were taken from the Music Information Retrieval Evaluation eXchange (MIREX) competition. Each takes a standard input (a path to the files) and produces a standard output (structure information), but the algorithms may be written in different languages.

- SALAMI had a 250K-hour allocation on XSEDE via ICHASS; Kraken was used because of MATLAB licensing.

- 252,169 songs, ~18,000 hours of audio, generated 1.8 million structure files
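Because every algorithm shares the same input/output contract, a driver can run them all without knowing what language each is written in. The sketch below assumes a hypothetical contract (the audio path is passed as the last command-line argument and structure information is printed to stdout) and uses a one-line Python program as a stand-in algorithm; `run_algorithm` and `run_all` are illustrative names, not the actual SALAMI or MIREX harness.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_algorithm(command, audio_path):
    """Invoke one algorithm through the shared interface.

    Assumed contract (hypothetical): the executable takes an audio file path
    as its last argument and prints structure segments to stdout.
    """
    result = subprocess.run(
        [*command, str(audio_path)], capture_output=True, text=True, check=True
    )
    return result.stdout


def run_all(audio_paths, algorithms, out_dir):
    """Run every algorithm on every file; write one structure file per pair."""
    out_dir = Path(out_dir)
    written = []
    for audio in audio_paths:
        for name, command in algorithms.items():
            out = out_dir / f"{Path(audio).stem}.{name}.txt"
            out.write_text(run_algorithm(command, audio))
            written.append(out)
    return written


if __name__ == "__main__":
    # Stand-in "algorithm": prints a fixed segment (start, end, label) plus the
    # path it was given. A real one might be MATLAB, C, Java, and so on.
    echo_segment = [sys.executable, "-c",
                    "import sys; print('0.0', '30.0', 'A', sys.argv[1])"]
    algorithms = {f"alg{i}": echo_segment for i in range(1, 8)}  # seven algorithms
    with tempfile.TemporaryDirectory() as d:
        files = run_all(["song1.wav", "song2.wav"], algorithms, d)
        print(len(files))  # 14 = 2 songs x 7 algorithms
        print(files[0].read_text().strip())  # 0.0 30.0 A song1.wav
    # Full-scale count, assuming one output file per (song, algorithm) pair:
    print(252_169 * 7)  # 1765183
```

The last line checks the reported scale: 252,169 songs × 7 algorithms = 1,765,183 structure files, consistent with the 1.8 million figure if each algorithm produced exactly one file per song (an assumption). The same figures imply an average of roughly 4.3 minutes of audio per song (18,000 h × 60 / 252,169).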

PSI