Goals

- NDS Share(d) datasets: present online the below datasets so that users can find and obtain them. Highlight DOI's of both paper and dataset.

- Provide analysis tools along with each dataset (ideally deployable data-side to avoid having to copy the data).

Presentation Logistics

- Booth demos: NCSA, SDSC, ...

- Draft list of specific individuals to invite to booths

- Create a flyer (address how we approached the problem? discuss tech options)

- SCinet late breaking news talk

Technology

- Globus Publish (https://github.com/globus/globus-publish-dspace)

- Modified DSpace with Globus Authentication, Groups, and Transfer

- Example instance: Materials Data Facility

- Requires a Globus endpoint on each resource (not available for Swift stores, e.g. SDSC Cloud)

- Can skin a collection (currently doesn't support skinning instance)

- Analysis currently not supported

- yt Hub

- Built on Girder with additional plugins to support Jupyter, ownCloud, ...

- Example skinned instance: Galaxy Cluster Merger Catalog

- Would need allocation on BlueWaters to run tools there. May need to move Moesta dataset elsewhere...

- Resolution Service

- Given DOI → get URL to data, get loction, get machine, get local path on machine

- Given notebook, location, path → run notebook on data at resource

- Allows independence from repo technologies

- Allow repos to provide location information as metatdata, if not available attempt to resolve (e.g. from URL, index)

- Repos that don't have local computation options would need to move data

- Only requirement from repos is that data can be accessed via a URL

- Identify at least one notebook for demonstration

- Build as service with python library interface that can be shown in Jupyter

- Create an alternative bookmarklet client that can be shown on any of repo

- click on link get list of resources to run a selected notebook on

- Discussed as a need within TERRA effort

Datasets

1. MHD Turbulence in Core-Collapse Supernovae

Authors: Philipp Moesta (pmoesta@berkeley.edu), Christian Ott (cott@tapir.caltech.edu)

Paper URL: http://www.nature.com/nature/journal/v528/n7582/full/nature15755.html

Paper DOI: dx.doi.org/10.1038/nature15755

Data URL: https://go-bluewaters.ncsa.illinois.edu/globus-app/transfer?origin_id=8fc2bb2a-9712-11e5-9991-22000b96db58&origin_path=%2F

Data DOI: ??

Size: 90 TB

Code & Tools: Einstein Toolkit, see this page for list of available vis tools for this format

The dataset is a series of snapshots in time from 4 ultra-high resolution 3D magnetohydrodynamic simulations of rapidly rotating stellar core-collapse. The 3D domain for all simulations is in quadrant symmetry with dimensions 0 < x,y < 66.5km, -66.5km < z < 66.5km. It covers the newly born neutron star and it's shear layer with a uniform resolution. The simulations were performed at 4 different resolutions [500m,200m,100m,50m]. There are a total of 350 snapshots over the simulated time of 10ms with 10 variables capturing the state of the magnetofluid. For the highest resolution simulation, a single 3D output variable for a single time is ~26GB in size. The entire dataset is ~90TB in size. The highest resolution simulation used 60 million CPU hours on BlueWaters. The dataset may be used to analyze the turbulent state of the fluid and perform analysis going beyond the published results in Nature doi:10.1038/nature15755.

2. Probing the Ultraviolet Luminosity Function of the Earliest Galaxies with the Renaissance Simulations - Christine Kirkpatrick can you fill in the missing pieces?

(Also available on Wrangler?)

Authors: Brian O'Shea (oshea@msu.edu), John Wise, Hao Xu, Michael Norman

Paper URL: http://iopscience.iop.org/article/10.1088/2041-8205/807/1/L12/meta;jsessionid=40CF566DDA56AD74A99FE108F573F445.c1.iopscience.cld.iop.org

Paper DOI: dx.doi.org/10.1088/2041-8205/807/1/L12

Data URL:

Data DOI: ??

Size: 89 TB

Code & Tools: Enzo

In this paper, we present the first results from the Renaissance Simulations, a suite of extremely high-resolution and physics-rich AMR calculations of high-redshift galaxy formation performed on the Blue Waters supercomputer. These simulations contain hundreds of well-resolved galaxies at z ~ 25–8, and make several novel, testable predictions. Most critically, we show that the ultraviolet luminosity function of our simulated galaxies is consistent with observations of high-z galaxy populations at the bright end of the luminosity function (M1600 ⩽ -17), but at lower luminosities is essentially flat rather than rising steeply, as has been inferred by Schechter function fits to high-z observations, and has a clearly defined lower limit in UV luminosity. This behavior of the luminosity function is due to two factors: (i) the strong dependence of the star formation rate (SFR) on halo virial mass in our simulated galaxy population, with lower-mass halos having systematically lower SFRs and thus lower UV luminosities; and (ii) the fact that halos with virial masses below ~2 x 10^8 M do not universally contain stars, with the fraction of halos containing stars dropping to zero at ~7 x 10^6 M . Finally, we show that the brightest of our simulated galaxies may be visible to current and future ultra-deep space-based surveys, particularly if lensed regions are chosen for observation.

3. Dark Sky Simulation

Authors: Michael Warren, Alexandar Friedland, Daniel Holz, Samuel Skillman, Paul Sutter, Matthew Turk (mjturk@illinois.edu), Risa Wechsler

Paper URL: https://zenodo.org/record/10777#.V_VvKtwcK1M, https://arxiv.org/abs/1407.2600

Paper DOI: http://dx.doi.org/10.5281/zenodo.10777

Data URL: https://girder.hub.yt/api/v1/collection/578501e0c2a5f40001cec1d6/download (https://girder.hub.yt/#collection/578501e0c2a5f40001cec1d6)

Data DOI: ??

Size: 31 TB

Code & Tools: https://bitbucket.org/darkskysims/darksky_tour/

The cosmological N-body simulation designed to provide a quantitative and accessible model of the evolution of the large-scale Universe.

4. ...

Design notes

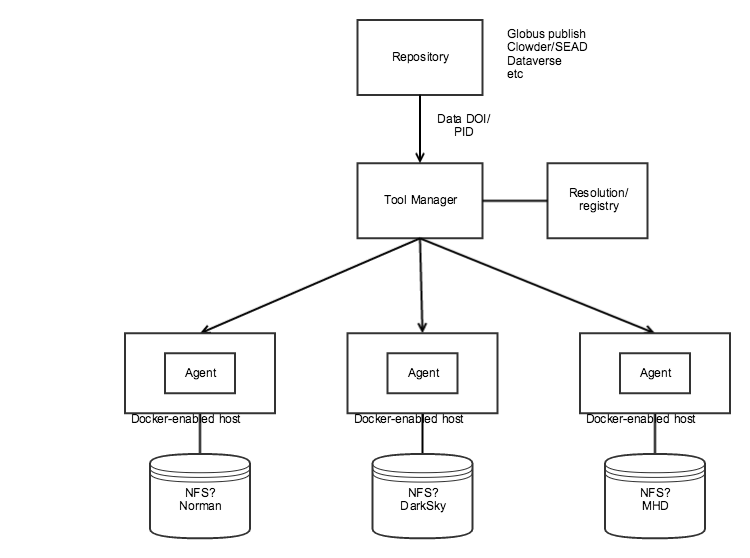

Photo of whiteboard from NDSC6

- On the left is the repository landing page for a dataset (Globus, SEAD, Dataverse) with a button/link to the "Job Submission" UI

- Job Submission UI is basically the Tool manager or Jupyter tmpnb

- At the top (faintly) is a registry that resolves a dataset URL to it's location with mountable path

- (There was some confusion whether this was the dataset URL or dataset DOI or other PID, but now it sounds like URL – see example below)

- On the right are the datasets at their locations (SDSC, NCSA)

- The user can launch a container (e.g., Jupyter) that mounts the datasets readonly and runs on a docker-enabled host at each site.

- Todo list on the right:

- Data access at SDSC (we need a docker-enabled host that can mount the Norman dataset)

- Auth – how are we auth'ing users?

- Container orchestration – how are we launching/managing containers at each site

- Analysis?

- BW → Condo : Copy the MHD dataset from Blue Waters to storage condo at NCSA

- Dataset metadata (Kenton)

- Resolution (registry) (Kyle)

Design Notes

Notes from discussion (Craig W, David R, Mike L) based on above whiteboard diagram:

What we have:

- "Tool Manager" demonstrated at NDSC6

- Angular UI over a very simple Python/Flask REST API.

- The REST API allows you to get a list of supported tools, post/put/delete instances of running tools.

- Fronted with a basic NGINX proxy that routes traffic to the running container based on ID (e.g., http://tooserver/containerId/)

- Data is retrieved via HTTP get using repository-specific APIs. Only Clowder and Dataverse are supported

- Docker containers are managed via system calls (docker executable)

- ytHub/tmpnb:

- The yt.hub team has extended Jupyter tmpnb to support volume mounts. They've created fuse mounts for Girder.

- PRAGMA PID service

- Demonstrated at NDSC6, appears to allow attaching arbitrary metadata to registered PID.

- Analysis

- For the Dark Sky dataset, we can use the notebook demonstrated by the yt team.

- For the Dark Sky dataset, we can use the notebook demonstrated by the yt team.

What we need to do:

- Copy MHD dataset to storage condo

- Docker-enabled hosts with access to each dataset (e.g., NFS) at SDSC, possibly in the yt DXL project, and in the NDS Labs project for MHD

- Decide whether to use/extend existing Tool Manager, yt/tmpnb or Jupyter tmpnp (or something else)

- Define strategy for managing containers at each site

- Simple: "ssh docker run -v" or use the Docker API

- Harder: Use Kubernetes or Docker Swarm for container orchestration. For example, launch a jupyter container on a node with label "sdsc"

- Implement the resolution/registry

- Ability to register a data URL with some associated metadata.

- Metadata would include site (SDSC, NCSA) and volume mount information for the dataset.

- The PRAGMA PID service looks possible at first glance, but may be too complex for what we're trying to do. It requires handle.net integration.

- Implement bookmarklet: There was discussion of providing some bookmarklet javascript to link a data DOI/PID to the "tool manager" service

- Authentication:

- TBD – how do we control who gets access, or is it open to the public?

- In the case of Clowder/Dataverse, all API requests include an API key

- Analysis:

- Need to get notebooks/code to demonstrate how to work with the MHD and Norman data.

Example case for resolution (not a real dataset for SC16)

- A dataset has a Globus Publish landing page https://publish.globus.org/jspui/handle/ITEM/113

- This dataset has the URL

- This would map to Nebula:

- /scratch/mdf/publication_113