http://www.ncsa.illinois.edu/news/story/ncsa_brown_dog_and_box_skills_speed_up_astronomical_research

Brown Dog, a prototype Data Transformation Service (DTS) developed at the National Center for Supercomputing Applications (NCSA) with support from the National Science Foundation (NSF) is partnering with Box to bring intelligence framework capabilities to users' content by leveraging its recently introduced Box Skills. With the release of one of our transformations as a preliminary Box Skill, Box users and scientists will soon be able to find images of similar galaxies based on a query galaxy image. Box Skills are not automatically enabled for all Box instances and the Galaxy Skill will have a limited initial rollout. The University of Illinois is planning to enable and configure the Galaxy Skill on the Campus instance. Other Universities or businesses who want to use this Skill will need to create their own Skill application. Anyone interested in having this Box Skill on their enterprise can reach out to the NCSA Brown Dog team at browndog-support@ncsa.illinois.edu for assistance in creating an instance of the Galaxy Skill which can be authorized on their instance of Box.

What is a Box Skill?

Box Skills are bits of code that operate on folders in Box. Apply them to a folder, and a Box Skill automatically analyzes each file placed into that folder using a machine learning algorithm, and then writes the output of its analysis as metadata on the file. For example, apply an audio Skill to a folder, place an audio file in that folder, and the Skill processes the audio file using a machine learning algorithm and then adds a transcript to that audio file. Users can then view the transcript when viewing the file in Box. The metadata information can drive other Box functionality, such as search. With the Galaxy Skill, Box scientists can upload an image of an unknown galaxy and have a set of possible galaxy matches returned.

The Galaxy Skill

The original tool which this was based upon was developed by the DES Labs for the Dark Energy Survey (DES) at NCSA. Brown Dog and Box are partnering to bring this technology, once reserved for mission-scale science, to Box users everywhere. This will create new opportunities for scientists and Box users to widely utilize machine learning tools to extract actionable data from images. An example of this skill in operation: Take the following images of galaxies captured by DES selected by a query image on the top left. This is just an example of the capabilities of this skill which is able to search among thousands of galaxy images.

How it works:

Using the above example, Box users can upload an image of a galaxy to Box, which is then sent to the Autoencoder Deep Learning model, which is pre-trained and running in the back end. The model then creates a one-dimensional compressed representation in a so-called “latent space” of reference for this new image and compares that to thousands of galaxy images in a database in that same space. Each one of the newly created floating points represents a distinct characteristic about the submitted image, such as roundness, brightness, size, orientation, etc.

The autoencoder also compresses these images from roughly 40,000 pixels (in three channels) down to about fifty floating points (roughly a 2000x compression), which vastly expedites the search process by using smaller sizes. Judging by similarities in the submitted galaxy image, the autoencoder will reveal its ‘Top 5 most similar galaxies.’ The images of the five similar galaxies are then displayed in the sidebar when previewing the galaxy image in Box.

What does this mean for the Box community?

The future goal for the next version of this skill is for users to be able to apply this same model to any set of images - plants, faces, galaxies, etc.- to quickly find similar images throughout a database.

Challenge:

To maintain a reliable and robust user technology that will produce an accurate ‘Top 5’ result, there is a continuous effort to update and maintain the deep learning model.

If you want to develop a Box Skill yourself, you can do so by building with the Box Skills Kit, a developer toolkit that makes it easy to create and configure Box Skills. Using the Box Skills Kit, you can apply the AI/ML technology of your choice to enhance data in Box. You can use any applicable third-party AI/ML service or your own internally-built AI/ML service. You can learn more about the Box Skills by visiting box.com/skills. Learn more about Brown Dog, Box Skills, and DES.

Started in 2013, the Predictive Ecosystem Analyzer (PEcAn) effort aims to enhance ecosystem science, policy, and management by informing them with the best data and models available. An essential part of this vision is the synthesis of existing data sources with ecosystem models. As ecosystem science has seen an explosion of available data and data types there is a need to be able to ingest and adapt data from a wide variety of sources. In collaboration with the Brown Dog effort the PEcAn and Brown Dog teams work to support this data ingestion, helping scientists keep pace with the rate at which the research community is generating new observations. One place PEcAn uses Brown Dog is to handle the processing of input data used to drive models, such as meteorological observations, from a raw source to the format that a particular ecosystem model needs. Because Brown Dog is a cloud service, it not only does this transformation, but does so without using the computational or storage capacity of the user’s machine, making data more accessible to anyone regardless of the computational capabilities of their machine. This is critical for PEcAn users, who often only need a small local portion of a large (TB or more) global data set, which allows PEcAn to be run on laptops from field, where both storage and bandwidth are limited, and in the classroom. The ability of Brown Dog to record data provenance is also key for PEcAn users to ensuring data can be tracked down, scrutinized, and reused whenever needed. The PEcAn team will continue to build tools around Brown Dog in order to synthesize more environmental data with all ecosystem models and in turn make these data ingestion tools available to the larger ecological community through Brown Dog towards promoting reproducible ecosystem modeling and forecasting.

To learn more about PEcAn visit: https://pecanproject.github.io . PEcAn is developed as open source software with source available here: https://github.com/PecanProject/pecan .

The Brown Dog project funds roughly a dozen Ph.D. and Masters students across its use cases to help drive activities within the supported scientific domains. Today we would like to congratulate Ankit Rai, Ph.D. who successfully defended his Ph.D. thesis this month. Ankit, working with Co-PI Barbara Minsker, has worked at the intersection of Informatics, Civil Engineering, and Social Science:

“My research work primarily addressed the limitation of current approach in studying landscape preferences by using advanced data science techniques. As a part of this work, a novel framework is created for identifying urban green storm water infrastructure (GI) designs (wetlands/ponds, urban trees, and rain gardens/bioswales) from high-resolution Google Earth images using state of the art computer vision and machine learning methods. The GI identification framework was also validated as an approach for collecting landscape preference data towards improving the understanding of what specific features are most desired. Previous research has shown that high-preference green settings are correlated with improved human health and well being. We further curated social media data using Twitter, Flickr, and Instagram to analyze GI preferences using qualitative codebook analysis and natural language processing techniques. The models and findings are implemented as Brown Dog services allowing others to leverage these tools as opposed to having to re-implement these capabilities within their research when using similar datasets”

As part of his research Ankit has developed a number of extractors to assign a green index to pedestrian routes based given path coordinates, automatically estimate human preference of landscapes given either images or text describing those landscapes, detect green infrastructure types within aerial images, as well as versions of these extractors capable of operating on data contained within social media feeds such as Twitter, Flickr, and Instagram.

To use these tools and more simply sign up for a Brown Dog account!:

Updated tutorial materials for the Brown Dog beta instance are now available here:

Brown Dog Tutorial - Beta Release

To facilitate users in the portions involving the creation of new transformation tools to be deployed in the Brown Dog Data Transformation Service (DTS) we have incorporated a new docker based utility that allows one to get going quickly, the BD Development Base:

https://opensource.ncsa.illinois.edu/bitbucket/projects/BD/repos/bd-base/

On your local machine all you need is git and docker (which you would need regardless), then simply type:

and you will be up and running with your own DTS instance (and all of its dependent sub-services). To startup a basic tool development environment automatically connected to this personal DTS instance then type:

We also provide a Virtual Machine containing these components and utilities to further facilitate users as they get started:

http://browndog.ncsa.illinois.edu/downloads/bd-base.ova

If interested in an onsite hands-on tutorial for your organization please let us know at browndog-support@ncsa.illinois.edu!

Created a new Brown Dog YouTube video highlighting a number of the client interfaces.

We had a paper accepted into IEEE Big Data this year. The paper goes over the architecture as well as the various components that make up Brown Dog. If you need to cite Brown Dog this is the paper to use:

S. Padhy, G. Jansen, J. Alameda, E. Black, L. Diesendruck, M. Dietze, P. Kumar, R. Kooper, J. Lee, R. Liu, R. Marciano, L. Marini, D. Mattson, B. Minsker, C. Navarro, M. Slavenas, W. Sullivan, J. Votava, K. McHenry, "Brown Dog: Leveraging Everything Towards Autocuration", IEEE Big Data, 2015

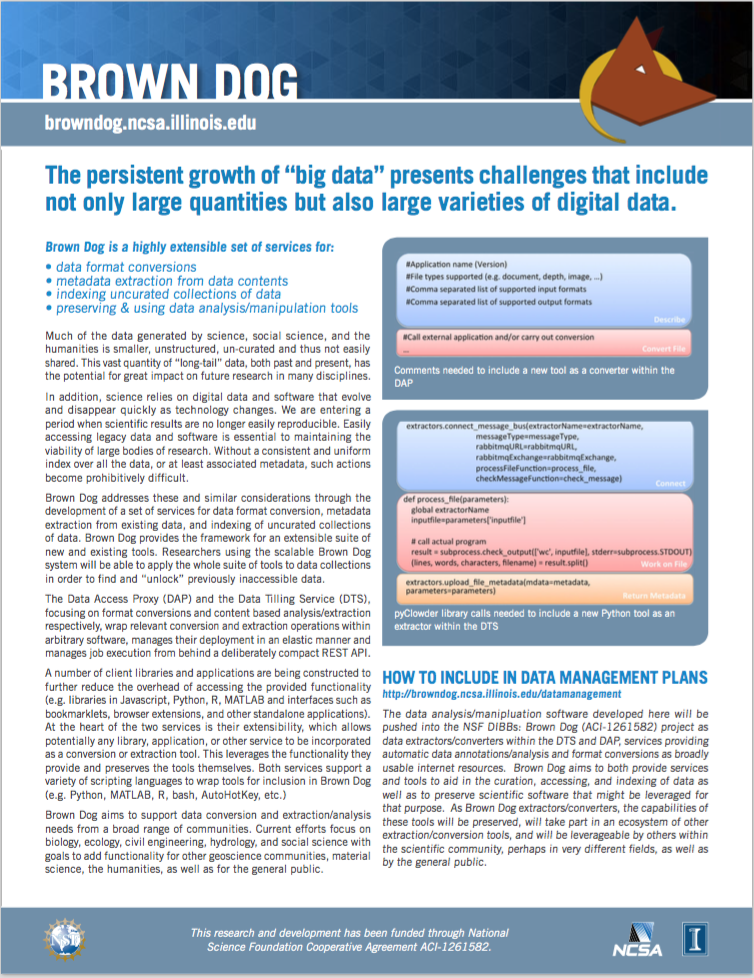

Moving into a friendly user mode we have begun to put together some new information resources to aid new users in using Brown Dog services. For example the Brown Dog flyer below also serves as a cheat containing the REST API for conversions/extractions and details on how to add data analysis/manipulation tools as new converters/extractors to extend Brown Dog's capabilities.

Modelling the DAP and DTS services to fill a role similar to that of a DNS, except for much more demanding operations over data, we often demonstrate Brown Dog via example client applications that utilize the services. In the posts below we show a number of examples of the DAP and DTS being used in general workflow systems such as DataWolf, specialized workflow systems such as PEcAn, content management/curation systems such as Clowder, a command line interface, a web interface, a Chrome extension, a bookmarklet, and from within HTML via a javascript library. In addition there is a python library with plans to also create interface libraries in R and Matlab. At its lowest level, however, the DAP and DTS are RESTful web services with an API that is directly callable from a wide variety of programming and scripting languages. For example the simple bash script below:

#!/bin/bash

url="http://dap-dev.ncsa.illinois.edu:8184/convert/$2/"

for input_file in `ls *.$1` ; do

output_url=`curl -u user1:password -s -H "Accept:text/plain" -F "file=@$input_file" $url`

output_file=${input_file%.*}.$2

echo "Converting: $input_file to $output_file"

while : ; do

wget -q --user=user1 --password=password -O $output_file $output_url

if [ ${?} -eq 0 ] ; then break ; fi

sleep 1

done

done

Our ultimate goal will be to include support for the DAP and DTS at the OS level and like the DNS, though largely invisible to the average user, be an essential part of the internet in terms of accessing, finding, and using data.

Here is a video of some of the capabilities we are adding to Brown Dog in support of our Critical Zone use case with Praveen Kumar at UIUC. Towards better understanding the human impacts to a regions hydrology we have added extraction tools to identify floodplains in lidar data and pull historical river from digitized maps:

Special thanks to Qina Yan (Ph.D. student in Civil & Environmental Engineering), Liana Diesendruk (Research Programmer), and Smruti Padhy (Research Programmer), Jong Lee (Senior Research Programmer), and Chris Navarro (Senior Research Programmer), for their work on these tools and for developing the DataWolf workflow.

Coming releases of PEcAn will come with the ability to utilize Brown Dog for data conversions to model specific formats (part of our Ecology use case with Mike Dietze at Boston University). In developments to come this will aid PEcAn in the usage of large data sets such as NARR. Below you can see an example of Brown Dog being used to process the data needed for the SIPNET model:

and for the ED model:

Special thanks to Betsy Cowdery (Ph.D. student in Earth Sciences), Edgar Black (Research Programmer), and Rob Kooper (Senior Research Programmer) for adding the needed conversions to Brown Dog and interfacing to PEcAn.

Added some some videos showing what we are doing for our Green Infrastructure use case with Barbara Minsker, Art Schmidt, and Bill Sullivan at UIUC. In an effort to study the human health impacts of our day to day environment a number of extraction tools were built and deployed to do things such as assign a green index to photographed scenes, estimate human preference, estimate human sentiment from written descriptions, and identify bodies of water in aerial photos. Below we show the extraction of green indices for various urban pathways:

the extraction of a human preference score estimate for photographed scenes:

the extraction of the writers sentiment for text descriptions of a given scene:

and for the extraction of areas containing bodies of water in aerial photos:

Special thanks to Ankit Rai (Ph.D. student in Informatics), Marcus Slavenas (Research Programmer), and Luigi Marini (Senior Research Programmer) for their development of these extractors and example workflows in Clowder.

Towards highlighting what can be done with the Brown Dog services we have built a number of clients (e.g. a javascript library, bookmarklets for the DAP and DTS, and a Chrome extension). We have added a couple more including a python library and a command line interface (shown below). In the first video we show a conversion to access a directory of Kodak Photo CD images then chaining that with an extraction call to pull information from the image. We emphasize that none of these capabilities reside locally but are pulled together from an extensible collection of tools distributed within the cloud.

In the next video we show how to use the extracted data to build an index over a collection of files and then perform a search to find similar data:

The Brown Dog Tools Catalog will serve as a means to both collect new conversion/extraction tools from the community while simultaneously serving as platform for finding and preserving such tools. We have populated the Tools Catalog with a handful of the Medici extractors and Polyglot conversion scripts to get it started:

Adding a new tool is a simple matter of registering the tool along with a very simple wrapper script that will allow either the DAP or DTS to make use of it. Below we show an example adding scripts to make use of PEcAn for ecological model conversions:

Working to add support for data conversions in support of ecological models via the tools developed in PEcAn. For example Ameriflux data to the netCDF CF standard used with PEcAn:

and converting that to the format needed by various models, e.g. SIPNET:

The DAP, built on top of NCSA Polyglot, chains conversion tools together within the "cloud" allowing one to jump from format to format as needed. The DAP will eventually support the intelligent moving of data/computation to handle large datasets (e.g. NARR) and support a variety of models within ecology (e.g. ED) as well as other domains.

Some videos of the new Brown Dog Google Chrome extension allowing the Data Tilling Service (DTS), based off of NCSA Medici, to be called on arbitrary pages in order to index collections of data. Note the text in the queries is not part of the page or images on the page but being extracted from the image contents using cloud hosted tools within the DTS, specifically the face detector within OpenCV and the Tesserct OCR engine. The DTS will host a suite of such tools and make it easy for users to add additional tools: