Updated tutorial materials for the Brown Dog beta instance are now available here:

Brown Dog Tutorial - Beta Release

To facilitate users in the portions involving the creation of new transformation tools to be deployed in the Brown Dog Data Transformation Service (DTS) we have incorporated a new docker based utility that allows one to get going quickly, the BD Development Base:

https://opensource.ncsa.illinois.edu/bitbucket/projects/BD/repos/bd-base/

On your local machine all you need is git and docker (which you would need regardless), then simply type:

and you will be up and running with your own DTS instance (and all of its dependent sub-services). To startup a basic tool development environment automatically connected to this personal DTS instance then type:

We also provide a Virtual Machine containing these components and utilities to further facilitate users as they get started:

http://browndog.ncsa.illinois.edu/downloads/bd-base.ova

If interested in an onsite hands-on tutorial for your organization please let us know at browndog-support@ncsa.illinois.edu!

Created a new Brown Dog YouTube video highlighting a number of the client interfaces.

We had a paper accepted into IEEE Big Data this year. The paper goes over the architecture as well as the various components that make up Brown Dog. If you need to cite Brown Dog this is the paper to use:

S. Padhy, G. Jansen, J. Alameda, E. Black, L. Diesendruck, M. Dietze, P. Kumar, R. Kooper, J. Lee, R. Liu, R. Marciano, L. Marini, D. Mattson, B. Minsker, C. Navarro, M. Slavenas, W. Sullivan, J. Votava, K. McHenry, "Brown Dog: Leveraging Everything Towards Autocuration", IEEE Big Data, 2015

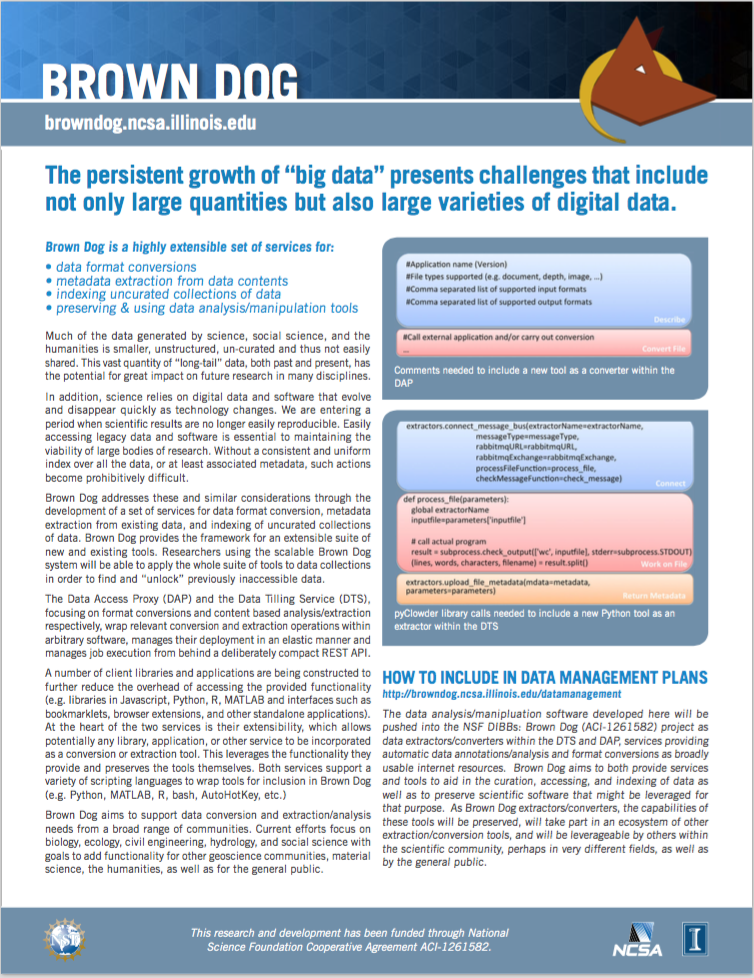

Moving into a friendly user mode we have begun to put together some new information resources to aid new users in using Brown Dog services. For example the Brown Dog flyer below also serves as a cheat containing the REST API for conversions/extractions and details on how to add data analysis/manipulation tools as new converters/extractors to extend Brown Dog's capabilities.

Modelling the DAP and DTS services to fill a role similar to that of a DNS, except for much more demanding operations over data, we often demonstrate Brown Dog via example client applications that utilize the services. In the posts below we show a number of examples of the DAP and DTS being used in general workflow systems such as DataWolf, specialized workflow systems such as PEcAn, content management/curation systems such as Clowder, a command line interface, a web interface, a Chrome extension, a bookmarklet, and from within HTML via a javascript library. In addition there is a python library with plans to also create interface libraries in R and Matlab. At its lowest level, however, the DAP and DTS are RESTful web services with an API that is directly callable from a wide variety of programming and scripting languages. For example the simple bash script below:

#!/bin/bash

url="http://dap-dev.ncsa.illinois.edu:8184/convert/$2/"

for input_file in `ls *.$1` ; do

output_url=`curl -u user1:password -s -H "Accept:text/plain" -F "file=@$input_file" $url`

output_file=${input_file%.*}.$2

echo "Converting: $input_file to $output_file"

while : ; do

wget -q --user=user1 --password=password -O $output_file $output_url

if [ ${?} -eq 0 ] ; then break ; fi

sleep 1

done

done

Our ultimate goal will be to include support for the DAP and DTS at the OS level and like the DNS, though largely invisible to the average user, be an essential part of the internet in terms of accessing, finding, and using data.

Here is a video of some of the capabilities we are adding to Brown Dog in support of our Critical Zone use case with Praveen Kumar at UIUC. Towards better understanding the human impacts to a regions hydrology we have added extraction tools to identify floodplains in lidar data and pull historical river from digitized maps:

Special thanks to Qina Yan (Ph.D. student in Civil & Environmental Engineering), Liana Diesendruk (Research Programmer), and Smruti Padhy (Research Programmer), Jong Lee (Senior Research Programmer), and Chris Navarro (Senior Research Programmer), for their work on these tools and for developing the DataWolf workflow.

Coming releases of PEcAn will come with the ability to utilize Brown Dog for data conversions to model specific formats (part of our Ecology use case with Mike Dietze at Boston University). In developments to come this will aid PEcAn in the usage of large data sets such as NARR. Below you can see an example of Brown Dog being used to process the data needed for the SIPNET model:

and for the ED model:

Special thanks to Betsy Cowdery (Ph.D. student in Earth Sciences), Edgar Black (Research Programmer), and Rob Kooper (Senior Research Programmer) for adding the needed conversions to Brown Dog and interfacing to PEcAn.

Added some some videos showing what we are doing for our Green Infrastructure use case with Barbara Minsker, Art Schmidt, and Bill Sullivan at UIUC. In an effort to study the human health impacts of our day to day environment a number of extraction tools were built and deployed to do things such as assign a green index to photographed scenes, estimate human preference, estimate human sentiment from written descriptions, and identify bodies of water in aerial photos. Below we show the extraction of green indices for various urban pathways:

the extraction of a human preference score estimate for photographed scenes:

the extraction of the writers sentiment for text descriptions of a given scene:

and for the extraction of areas containing bodies of water in aerial photos:

Special thanks to Ankit Rai (Ph.D. student in Informatics), Marcus Slavenas (Research Programmer), and Luigi Marini (Senior Research Programmer) for their development of these extractors and example workflows in Clowder.

Towards highlighting what can be done with the Brown Dog services we have built a number of clients (e.g. a javascript library, bookmarklets for the DAP and DTS, and a Chrome extension). We have added a couple more including a python library and a command line interface (shown below). In the first video we show a conversion to access a directory of Kodak Photo CD images then chaining that with an extraction call to pull information from the image. We emphasize that none of these capabilities reside locally but are pulled together from an extensible collection of tools distributed within the cloud.

In the next video we show how to use the extracted data to build an index over a collection of files and then perform a search to find similar data:

The Brown Dog Tools Catalog will serve as a means to both collect new conversion/extraction tools from the community while simultaneously serving as platform for finding and preserving such tools. We have populated the Tools Catalog with a handful of the Medici extractors and Polyglot conversion scripts to get it started:

Adding a new tool is a simple matter of registering the tool along with a very simple wrapper script that will allow either the DAP or DTS to make use of it. Below we show an example adding scripts to make use of PEcAn for ecological model conversions:

Working to add support for data conversions in support of ecological models via the tools developed in PEcAn. For example Ameriflux data to the netCDF CF standard used with PEcAn:

and converting that to the format needed by various models, e.g. SIPNET:

The DAP, built on top of NCSA Polyglot, chains conversion tools together within the "cloud" allowing one to jump from format to format as needed. The DAP will eventually support the intelligent moving of data/computation to handle large datasets (e.g. NARR) and support a variety of models within ecology (e.g. ED) as well as other domains.

Some videos of the new Brown Dog Google Chrome extension allowing the Data Tilling Service (DTS), based off of NCSA Medici, to be called on arbitrary pages in order to index collections of data. Note the text in the queries is not part of the page or images on the page but being extracted from the image contents using cloud hosted tools within the DTS, specifically the face detector within OpenCV and the Tesserct OCR engine. The DTS will host a suite of such tools and make it easy for users to add additional tools:

Added some higher resolution videos of the DAP bookmarklet being used for images:

for documents:

for 3D data:

and for archive/container files (e.g. zip, rar):

Added a couple higher resolution videos of the dap.js library which allows one to change formats of data within HTML so that they are more broadly viewable over the web. An example within links converting a JPEG-2000 and SID image to JPEG and a PNG image to XML via the opensource Daffodil implementation of DFDL:

and an example within image tags:

Initial deployment of the DAP and DTS was done this week. A set of tests, testing a subset of conversions and data extractions, is run every 2 hours to ensure that they are up, usable, and providing a minimal subset of capabilities. The status of the two services can be seen here:

http://browndog.ncsa.illinois.edu/status.html

Low values are desired, indicating both that the tests are completing and the test requests are being handled quickly. The tests being run are currently in the Medici and Polyglot repositories respectively:

https://opensource.ncsa.illinois.edu/stash/projects/POL/repos/polyglot/browse/src/main/web/dap/tests/tests.txt

https://opensource.ncsa.illinois.edu/stash/projects/MMDB/repos/medici-play/browse/scripts/web/tests/tests.txt

Next steps in the deployment include deploying the VM elasticity components for the two services, including more tests, and allowing the tests to be run asynchronously so one can see the effect of the additional extractors/converters.